Kafka Load Balancing at Agoda, AI Competitions to Join, and More

🎬 S2:E05 Lag, Load, Live.

Hey, TechFlixers!

Welcome back to another episode of TechFlix Weekly! Today, we're diving deep into the world of data streams and job schedulers, exploring the intricacies of load balancing with Apache Kafka and designing robust distributed systems.

Grab your favorite beverage, settle in, and let's get learning!

🔦 Spotlight

📢 Apache Kafka Load Balancing

At Agoda, Apache Kafka is crucial for managing huge data flows, streaming hundreds of terabytes daily. But ensuring smooth data processing isn't always easy. Variations in processing loads and consumer rates can cause bottlenecks and delays, making it vital to balance the workload effectively.

Challenges in Load Balancing

Different Hardware Performance: A private cloud setup means not all servers are equal. Some are faster, and some are slower, leading to uneven processing rates.

Uneven Message Workloads: Not all messages are created equal. Some need more processing time than others, like those involving complex database queries or third-party services.

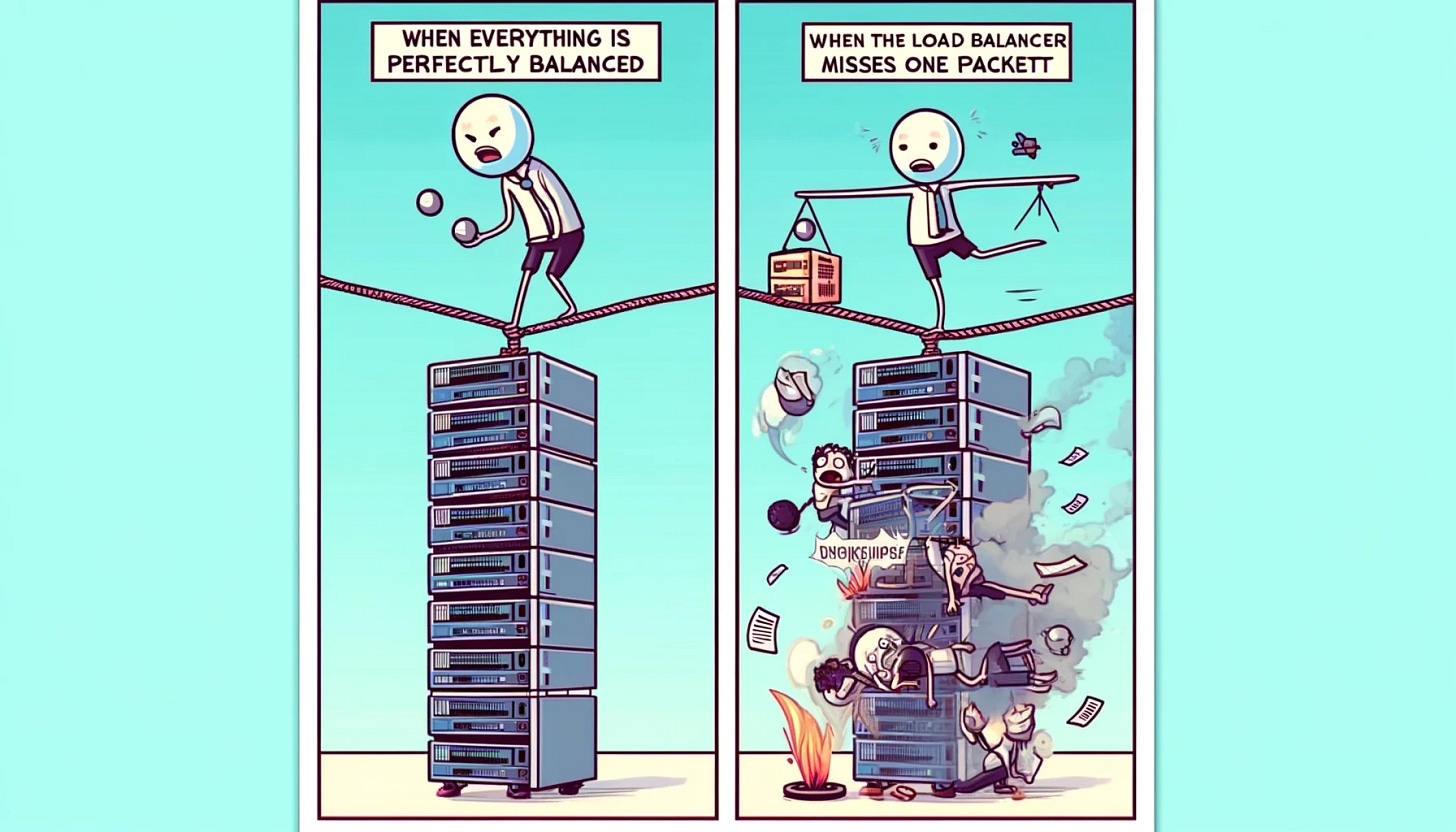

Solutions

Static Solutions:

Uniform Hardware: Use the same type of hardware across deployments.

Weighted Load Balancing: Assign more work to faster servers.
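To make the weighted idea concrete, here is a minimal sketch of splitting partitions across consumers in proportion to a fixed weight per server. The function name, the weights, and the largest-remainder rounding are all assumptions for illustration, not Agoda's actual implementation.

```python
def assign_partitions(num_partitions: int, weights: dict[str, float]) -> dict[str, int]:
    """Split partitions across consumers in proportion to their weights."""
    total = sum(weights.values())
    # Ideal (possibly fractional) share for each consumer.
    exact = {name: num_partitions * w / total for name, w in weights.items()}
    # Start from the rounded-down share.
    counts = {name: int(share) for name, share in exact.items()}
    leftover = num_partitions - sum(counts.values())
    # Hand the remaining partitions to the largest fractional remainders.
    by_remainder = sorted(exact, key=lambda n: exact[n] - counts[n], reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1
    return counts


# A server rated twice as fast gets twice as many partitions.
print(assign_partitions(12, {"fast": 2.0, "slow": 1.0}))
```

The catch with any static weighting is that the weights are fixed up front, so they can't react when a normally fast server has a bad day.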

Dynamic Solutions:

Lag-aware Producers: Adjust message production based on the current workload, sending fewer messages to busy servers.

Lag-aware Consumers: Let busy consumers temporarily stop processing to trigger a rebalance.
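Both dynamic ideas can be sketched in a few lines. This is only an illustration under stated assumptions: the inverse-lag weighting, the median-based threshold, and every function name here are hypothetical, and the lag numbers would come from your own monitoring rather than from any specific Kafka API call.

```python
import random
import statistics


def partition_weights(lags: dict[int, int]) -> dict[int, float]:
    """Give partitions with less consumer lag a larger share of new messages."""
    # +1 avoids division by zero when a partition has no lag at all.
    raw = {p: 1.0 / (lag + 1) for p, lag in lags.items()}
    total = sum(raw.values())
    return {p: w / total for p, w in raw.items()}


def choose_partition(lags: dict[int, int], rng=random) -> int:
    """Lag-aware producer: pick a partition at random, biased toward low lag."""
    weights = partition_weights(lags)
    partitions = list(weights)
    return rng.choices(partitions, weights=[weights[p] for p in partitions], k=1)[0]


def should_step_back(own_lag: int, group_lags: list[int], factor: float = 2.0) -> bool:
    """Lag-aware consumer: step back when lag is far above the group median."""
    median = statistics.median(group_lags)
    # max(..., 1) keeps the threshold meaningful when the group has no lag.
    return own_lag > factor * max(median, 1)


lags = {0: 0, 1: 900, 2: 50}  # hypothetical lag per partition
print(partition_weights(lags))
print(should_step_back(1000, [10, 20, 1000]))
```

In a real consumer, a `True` from `should_step_back` would be the cue to pause and let the group rebalance; how that is wired up depends on the client library you use.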

Real-world Results: By implementing these dynamic load-balancing strategies, they cut resource use by 50% while keeping systems running smoothly.

You can further explore the exact algorithms used on their engineering blog.

☕️ Tea Time

News you might have missed

Everyone’s hosting AI developer contests these days to gather data on potential use cases. Here are some active competitions worth a look.

Who’s Hiring?

LinkedIn says over 13K job openings for software engineers have been published in the last week.

Here are some notable mentions.

Flipkart has listed 50+ tech openings for their India office.

As per the latest trends, an SDE 2 with 2.5 YoE was offered a 28 LPA base salary.

Uber has over 40 open engineering roles across their Bangalore and Hyderabad offices.

Multiple recent offers on LeetCode indicate that Uber is offering a base salary of 40-45 LPA (including PF) for their SDE 2 position.

🚀 Power Up

Let’s focus on a question that recently appeared in a Flipkart interview.

Designing a Distributed Job Scheduler

This involves creating a system that can schedule, execute, and monitor tasks across multiple machines.

Key Requirements

Scalability: The scheduler must handle an increasing number of jobs and machines.

Fault Tolerance: It should continue to operate even if some machines fail.

Fairness and Prioritization: Jobs should be scheduled based on priority and resource availability.

Resource Management: Efficiently allocate resources (CPU, memory) to jobs.

Monitoring and Logging: Track the status of jobs and log their execution details.

High-Level Design

Job Submission Service: Provides an API for users to submit jobs, specifying parameters such as job type, priority, and required resources.

Scheduler: The core component that decides when and where jobs should run. It maintains a queue of jobs and allocates them to available machines based on current load and job requirements.

Worker Nodes: Machines that execute the jobs. Each worker node registers with the scheduler and periodically sends heartbeats to indicate it's alive and provide status updates.

Job Execution Service: Runs on each worker node to manage job execution. It starts, stops, and monitors jobs, and reports results to the scheduler.

Database: Stores job metadata, status, logs, and system state information. It should be a highly available and scalable database, like Cassandra, or a distributed SQL database.

Load Balancer: Distributes incoming job submissions to ensure no single component is overwhelmed.

Monitoring and Alerting: Tools like Prometheus and Grafana for monitoring system health, job statuses, and resource utilization, and setting up alerts for anomalies.
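The heartbeat mechanism is the piece interviewers tend to probe, so here is a minimal in-memory sketch of how the scheduler might track which workers are alive. `WorkerRegistry`, its fields, and the 15-second timeout are assumptions for illustration; a real system would persist this state in the database mentioned above.

```python
import time
from dataclasses import dataclass


@dataclass
class WorkerInfo:
    worker_id: str
    free_cpu: float
    free_memory_mb: int
    last_heartbeat: float


class WorkerRegistry:
    """Tracks workers by their most recent heartbeat."""

    def __init__(self, timeout_s: float = 15.0, clock=time.monotonic):
        self._timeout_s = timeout_s
        self._clock = clock  # injectable so the timeout logic is easy to test
        self._workers: dict[str, WorkerInfo] = {}

    def heartbeat(self, worker_id: str, free_cpu: float, free_memory_mb: int) -> None:
        # The first heartbeat doubles as registration; later ones refresh status.
        self._workers[worker_id] = WorkerInfo(
            worker_id, free_cpu, free_memory_mb, self._clock()
        )

    def alive_workers(self) -> list[WorkerInfo]:
        # A worker counts as alive if it reported within the timeout window.
        now = self._clock()
        return [
            w for w in self._workers.values()
            if now - w.last_heartbeat <= self._timeout_s
        ]


registry = WorkerRegistry()
registry.heartbeat("worker-1", free_cpu=4.0, free_memory_mb=8192)
print([w.worker_id for w in registry.alive_workers()])
```

Jobs that were running on a worker that drops out of `alive_workers()` are the ones the scheduler would need to reschedule for fault tolerance.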

Simplified Workflow

Submit a job.

Place the submitted job in a queue based on priority.

The scheduler picks a machine (worker node) with enough resources to run the job.

The selected machine executes the job using the Job Execution Service.

The worker node periodically sends job status updates to the scheduler.

Upon completion, the job's status and logs are updated in the database.
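The workflow above can be sketched as a single-process toy: a priority queue of jobs plus a first-fit search for a worker with enough free resources. All class and field names are hypothetical, lower priority numbers are assumed to mean more urgent, and a plain dict stands in for the database.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class Job:
    job_id: str
    priority: int  # assumption: lower number = more urgent
    cpu: float
    memory_mb: int


@dataclass
class Worker:
    worker_id: str
    free_cpu: float
    free_memory_mb: int


class Scheduler:
    def __init__(self, workers):
        self._workers = list(workers)
        self._queue = []
        # Sequence number breaks ties so equal-priority jobs stay FIFO.
        self._counter = itertools.count()
        self.status = {}  # stand-in for the job metadata database

    def submit(self, job: Job) -> None:
        heapq.heappush(self._queue, (job.priority, next(self._counter), job))
        self.status[job.job_id] = "QUEUED"

    def dispatch_next(self):
        """Place the most urgent job on the first worker with room.

        Returns the chosen worker_id, or None if nothing could be placed.
        """
        if not self._queue:
            return None
        priority, seq, job = heapq.heappop(self._queue)
        for worker in self._workers:
            if worker.free_cpu >= job.cpu and worker.free_memory_mb >= job.memory_mb:
                worker.free_cpu -= job.cpu
                worker.free_memory_mb -= job.memory_mb
                self.status[job.job_id] = "RUNNING"
                return worker.worker_id
        # No worker has capacity right now, so put the job back in the queue.
        heapq.heappush(self._queue, (priority, seq, job))
        return None


scheduler = Scheduler([Worker("small", 1.0, 1024), Worker("big", 8.0, 16384)])
scheduler.submit(Job("nightly-report", priority=5, cpu=1.0, memory_mb=512))
scheduler.submit(Job("payment-retry", priority=1, cpu=4.0, memory_mb=4096))
print(scheduler.dispatch_next())
print(scheduler.status)
```

Note that this toy only ever looks at the head of the queue, so one large job can block smaller ones behind it. That trade-off between strict priority and utilization is a good follow-up to raise in the interview.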

📨 Post Credits

And that’s a wrap for this episode!

Thanks for tuning in, and don’t forget to subscribe for your weekly dose of tech insights and industry updates.

Until next time, keep coding and stay awesome!

Fin.